Integrating Comparizen into Your CI/CD Pipeline

Automate visual testing as part of your build process.

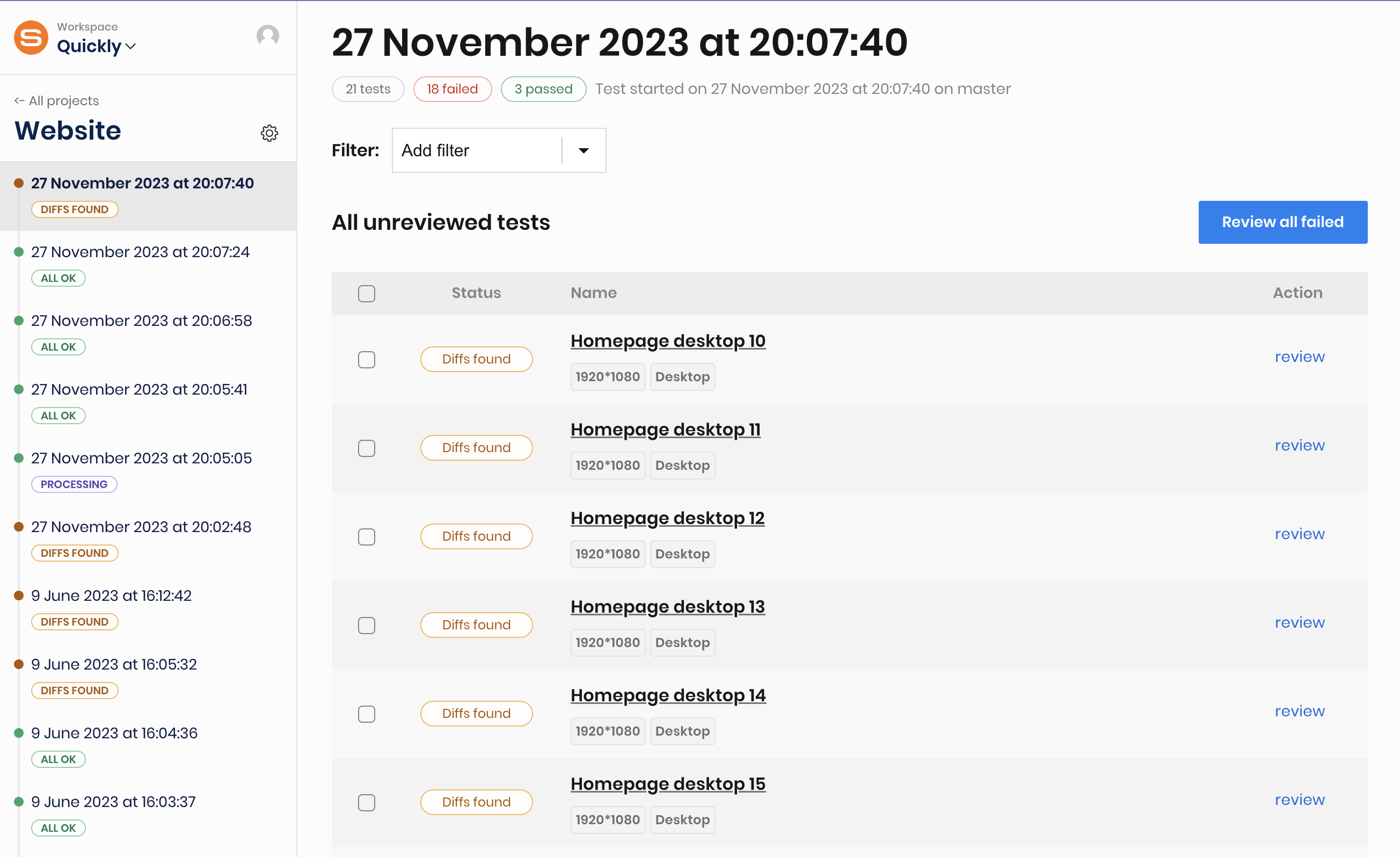

Integrating Comparizen directly into your Continuous Integration/Continuous Deployment (CI/CD) pipeline makes visual regression testing part of every build. Each commit is checked for visual regressions automatically, so UI bugs are caught before they reach production instead of relying on manual review.

Key Steps for Comparizen CI/CD Integration

While the exact implementation details depend on your specific CI/CD provider (like GitHub Actions, GitLab CI, Jenkins, Azure DevOps, etc.), the core process for integrating Comparizen visual testing involves these general steps:

- Prepare the CI/CD Test Environment: Configure your CI/CD runner environment with all necessary dependencies to execute your end-to-end test suite. This typically includes Node.js, relevant browsers (via Playwright, Cypress, etc.), and any other testing framework requirements.

- Securely Manage Comparizen Credentials: Provide your Comparizen API key and Project ID to the CI/CD environment securely. Leverage the secrets management capabilities offered by your CI/CD platform (e.g., GitHub Secrets, GitLab CI/CD variables) to avoid exposing sensitive credentials in your pipeline configuration files.

- Add Test Execution Step to Pipeline: Modify your pipeline configuration file (e.g., `.github/workflows/main.yml`, `.gitlab-ci.yml`, `Jenkinsfile`) to include a step that executes your visual regression test suite command (e.g., `npm run test:visual`, `npx playwright test`). Your Comparizen client integration (like `comparizen-playwright` or `comparizen-client`) automatically handles the creation of a Comparizen Test Run, uploads snapshots during the test execution, and finalizes the run upon completion.

- Process Comparizen Test Run Results: This is the core of effective CI/CD integration. Configure your pipeline to act based on the final status of the Comparizen Test Run. Your test execution step should ideally fail the pipeline build or merge request if Comparizen reports visual discrepancies (`DIFFS_FOUND`) or processing errors (`ERROR`). As described in the Comparizen documentation, the Test Run statuses (`ALL_OK`, `DIFFS_FOUND`, `HAS_REJECTED`, `ERROR`) can drive your build gating logic. Common actions include:

  - Failing the CI/CD job/pipeline if the Test Run status is anything other than `ALL_OK`.

  - Automatically posting links to the Comparizen comparison results page within the relevant pull request or merge request comments for easy review.
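The credential check and the gating rule from the steps above can be sketched in a few lines of TypeScript. This is a minimal illustration, not part of any Comparizen client: the helper names `missingCredentials` and `gate` are hypothetical, and how you obtain the final Test Run status and the results page URL depends on the client integration you use. Only the four status names and the two environment variable names come from this article.

```typescript
// Test Run statuses named in this article; everything else here is a hypothetical sketch.
type TestRunStatus = "ALL_OK" | "DIFFS_FOUND" | "HAS_REJECTED" | "ERROR";

interface GateDecision {
  exitCode: number; // 0 lets the pipeline continue, non-zero fails the job
  message: string;  // text suitable for a job log or a pull request comment
}

// Report which required credentials are absent from the CI environment,
// so the job can fail early with a clear message instead of mid-test.
function missingCredentials(env: Record<string, string | undefined>): string[] {
  const required = ["COMPARIZEN_API_KEY", "COMPARIZEN_PROJECT_ID"];
  return required.filter((name) => !env[name]);
}

// Gate the build: any status other than ALL_OK fails the job and
// points reviewers at the comparison results page.
function gate(status: TestRunStatus, resultsUrl: string): GateDecision {
  if (status === "ALL_OK") {
    return { exitCode: 0, message: "Visual tests passed." };
  }
  return {
    exitCode: 1,
    message: `Visual tests need review (${status}): ${resultsUrl}`,
  };
}
```

In a Node.js script run by the pipeline, you could pass `process.env` to `missingCredentials`, then exit with the `exitCode` returned by `gate` and post its `message` as a pull request comment. Treating `HAS_REJECTED` the same as `DIFFS_FOUND` is a design choice; a stricter or looser policy only changes the branch inside `gate`.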

Example CI/CD Configuration (Conceptual GitHub Action)

```yaml
name: Visual Regression Tests

on: [push, pull_request]

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: Set up Node.js
        uses: actions/setup-node@v3
        with:
          node-version: '18' # Or your project's version

      - name: Install Dependencies
        run: npm ci # Use ci for faster, reliable installs in CI

      - name: Run Automated Visual Tests with Comparizen
        run: npm run test:visual # Example command for visual tests
        env:
          COMPARIZEN_API_KEY: ${{ secrets.COMPARIZEN_API_KEY }} # Securely access API key via secrets
          COMPARIZEN_PROJECT_ID: ${{ secrets.COMPARIZEN_PROJECT_ID }} # Securely access Project ID via secrets (if needed by script)
```

By embedding Comparizen visual regression testing within your CI/CD pipeline, you elevate it from a manual verification step to an automated, integral part of your quality assurance strategy, ensuring consistent visual quality with every deployment.